Great news! The good people behind the Webmin project have released a free (as in beer and speech) version of Cloudmin with support for the Linux KVM hypervisor.

This is so cool. Can't wait to try it out!

http://webmin.com/cloudmin.html

http://webmin.com/cinstall-kvm.html

-

Archive

Tag List

- .ro (1)

- 2012 (1)

- 32bit (1)

- 4bit (1)

- 6.3 (1)

- 6.5 (1)

- 6.6 (1)

- acs (1)

- ad (1)

- ahci (1)

- alert (1)

- android (1)

- anger (1)

- animals (1)

- anime (4)

- antena3 (1)

- antispam (1)

- apache (6)

- arnej (1)

- artica (1)

- ata (1)

- atlassian (1)

- awesome (1)

- b43 (1)

- bash (2)

- bbc (2)

- bcm43 (1)

- benchmark (2)

- bgp (1)

- bind (2)

- bios (1)

- bitdefender (1)

- bittorrent (2)

- blade runner (1)

- boot (1)

- bootloader (1)

- borg (1)

- broadband (1)

- broadcom (1)

- bruxelles (1)

- bsg (1)

- bt (2)

- btinfinity (1)

- bucium (1)

- build16 (1)

- bunnies (1)

- cache (1)

- caching (1)

- catalyst (1)

- centerim (1)

- centos (43)

- centos 6 (1)

- cisco (1)

- cli (3)

- clone (2)

- cloud (4)

- cloudmin (1)

- cloudstack (7)

- colin percival (1)

- confluence (1)

- copyright (1)

- copyright infringement (1)

- corruption (1)

- cosmos (1)

- cp (1)

- cpanel (2)

- cperciva (1)

- curvedns (1)

- cute (1)

- cve-2010-3081 (1)

- cyanogen (1)

- cyanogenmod (1)

- davmail (1)

- debian (2)

- dedicated servers (1)

- dell (2)

- deltarpm (1)

- desktop (3)

- desktop linux (1)

- dexter (1)

- diaspora (1)

- die (1)

- distributed filesystems (1)

- djbdns (1)

- dns (9)

- dnscurve (1)

- documentary (1)

- dolphins (1)

- domu (1)

- download (1)

- drivers (1)

- drm (1)

- dstat (1)

- dumpe2fs (1)

- el (3)

- el clone (1)

- el6 (21)

- elastix (1)

- elrepo (2)

- elvish (1)

- email (4)

- empathy (1)

- enlefko (1)

- epic (1)

- ergo proxy (1)

- ergonomic (1)

- exchange (1)

- experiment (1)

- exploit (1)

- ext (1)

- ext4 (1)

- fail (29)

- faith in humanity restored (1)

- fallocate (1)

- fancybox (1)

- fedora (7)

- ffmpeg (1)

- film (4)

- firefox (2)

- flash (2)

- fonts (1)

- food (1)

- fork (2)

- fosdem (1)

- fpaste (1)

- france (1)

- free (1)

- freebsd (4)

- freedom (2)

- freerdp (1)

- fuck this shit (6)

- funny (1)

- gallery (1)

- gay (1)

- get_iplayer (1)

- ghost in the shell (1)

- glusterfs (1)

- godaddy (1)

- gpt (1)

- grep (1)

- grub (1)

- hackitat (1)

- hacktivism (1)

- hdsentinel (1)

- hello (1)

- hetzner (1)

- holiday (1)

- honeyroot (1)

- howto (3)

- httpd (2)

- humour (1)

- hvm (1)

- icann (1)

- im (2)

- imagemagick (1)

- imdb (1)

- intel (2)

- internet (5)

- io (1)

- iodine (1)

- ip (2)

- iplayer (1)

- isp (1)

- ivybridge (1)

- jabber (1)

- javascript (2)

- kernel (4)

- kernel-lt (1)

- kernel-ml (1)

- keyboard (1)

- kfreebsd (1)

- kvm (8)

- labplot (1)

- ldap (1)

- libreoffice (5)

- libreoffice.org (4)

- libva (1)

- linus (1)

- linux (63)

- linux raid (1)

- lsi (1)

- lts (3)

- mageia (1)

- mailpile (1)

- management (1)

- mandrake (1)

- mandriva (8)

- marathon (3)

- mariadb (1)

- market place (1)

- marketplace (1)

- memory (2)

- microsoft (1)

- milgram (1)

- miyazaki (1)

- mod_autoindex (1)

- mod_proxy (1)

- mod_security (1)

- mod_substitute (1)

- mongrel (1)

- mp3 (1)

- mplayer (1)

- music (4)

- mysql (1)

- ndjbdns (1)

- nested (1)

- nice (1)

- noscript (1)

- notes (8)

- ntop (1)

- nux (1)

- nx (1)

- o'reilly (1)

- offtopic (1)

- ogg (1)

- oom (1)

- open source (1)

- openoffice (1)

- openvm (1)

- openvpn (1)

- optical illusion (1)

- oracle (1)

- p2p (1)

- pac (1)

- parallels (1)

- paris (1)

- partition table (1)

- partitions (2)

- password (1)

- paste (1)

- pdnsd (1)

- phone (1)

- php (3)

- picasa (1)

- plesk (1)

- plf (1)

- policykit (1)

- politics (1)

- poodle (1)

- power (1)

- power usage (1)

- poweradmin (2)

- powerdns (2)

- pppoe (1)

- privacy (1)

- proxy (1)

- pxe (2)

- python (1)

- r210 (1)

- radio (2)

- raid (1)

- random (1)

- rbl (1)

- rdesktop (1)

- realhostip.com (1)

- reboot (1)

- reddit (1)

- redhat (4)

- redhat 6 (1)

- rels (1)

- remix (1)

- remount (1)

- repo (8)

- review (1)

- rhel (10)

- rhel 6 (1)

- rhel clone (1)

- ringtone (1)

- romania (2)

- rosa (5)

- routing (2)

- rpm (13)

- rsa (1)

- rtmpdump (1)

- ruby (3)

- russian (1)

- samba (1)

- sandy bridge (1)

- sandybridge (1)

- sas (1)

- sata (1)

- science (1)

- scientific linux (1)

- scientific linux 6 (1)

- scientificlinux (5)

- scientificlinux 6 (1)

- scientifixlinux (1)

- scifi (1)

- securecrt (1)

- security (4)

- security office (1)

- security.bsd.see_other_uids (1)

- selinux (2)

- shell (1)

- shit (2)

- shutdown (1)

- shutter (1)

- skype (3)

- small (1)

- smart (1)

- smartctl (2)

- smartphone (1)

- software raid (1)

- sopa (1)

- spamhaus (1)

- spotify (1)

- springdale (1)

- ssl (1)

- sslv3 (1)

- stage1 (1)

- startrek (2)

- static (1)

- stella (10)

- stupid (1)

- superevil (1)

- surbl (1)

- swap (1)

- swappiness (1)

- tcp (1)

- template (1)

- templates (1)

- testing (1)

- the big bang theory (1)

- the secret of blue water (1)

- time (1)

- tinydns (1)

- tip (1)

- trance (2)

- transmission (1)

- truncate (1)

- tulnic (1)

- turktelecom (1)

- twitter (1)

- ubuntu (2)

- vaapi (1)

- varnish (1)

- vim (1)

- virginmedia (1)

- virt (4)

- virtio (1)

- virtualisation (2)

- virtualmin (1)

- vnc (2)

- vpn (1)

- webmin (2)

- whois (1)

- wileyfox (1)

- windows7 (1)

- women (1)

- x86_64 (1)

- xen (2)

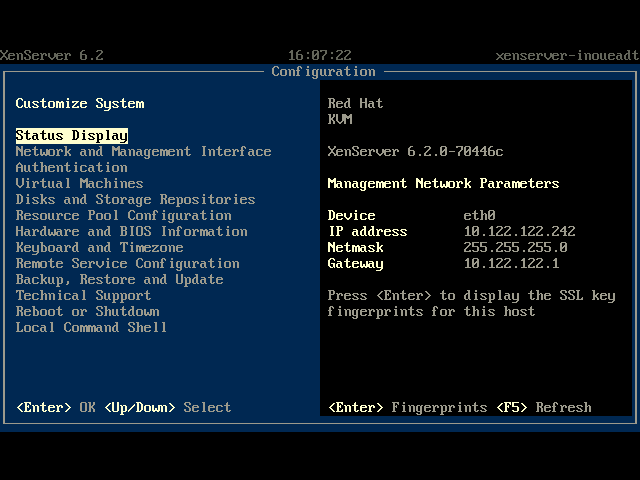

- xenserver (1)

- xmas (1)

- xmpp (1)

- yahoo (1)

- yum (7)

- zarb (1)

- zfs (1)

- zfsonlinux (1)

RSS Feed

Tools

Show the IP addressRandom password generator

Projects

Repos - My RPM repositories for CentOS/(RH)ELLinux LiveCDs - My CentOS based remixes

OpenVM.EU - Linux templates & appliances for Cloudstack

Links

ManuDavid