So here are some numbers. The hardware used is a DELL PowerEdge R210: Intel X3450, 16 GB RAM, 2 x 250 GB WD SATA II. The hardware RAID card is a DELL SAS 6 (LSI).

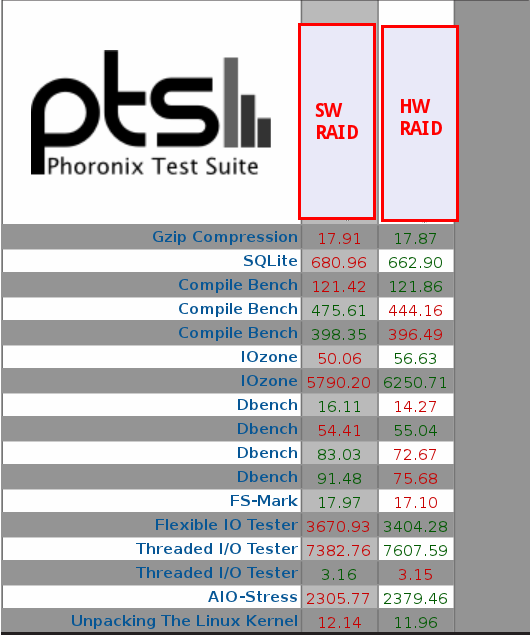

- Linux kernel `make`:
  - hwraid: 43m
  - swraid: 43m 15s
- hdparm:
  - hwraid: Timing cached reads: 9944.86 MB/sec; Timing buffered disk reads: 111.68 MB/sec
  - swraid: Timing cached reads: 9669.00 MB/sec; Timing buffered disk reads: 108.92 MB/sec
- Kernel `make` in a "2 CPU, 1 GB RAM" LVM-based CentOS 6.3 64-bit KVM VM:
  - hwraid: 50m
  - swraid: 53m
- Concurrent kernel `make` in 5 "2 CPU, 1 GB RAM" LVM-based CentOS 6.3 64-bit KVM VMs:
  - hwraid: avg time 84m, avg host load ~4.40
  - swraid: avg time 88m, avg host load ~5.30
- Phoronix's disk-oriented benchmark (`phoronix-test-suite benchmark pts/disk`):

Conclusion:

In some of the tests the software implementation fared better; in others the hardware card won. The hardware solution seems to have the upper hand (e.g. 50m vs 53m for the kernel build in a single VM), but most of the results differ only by a small margin. Personally, I prefer the software implementation because it gives me better control and involves less "vendor lock-in". It's also cheaper. But if a hardware solution helps you sleep better at night, by all means use it.
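To put "a small margin" into numbers, here is a minimal sketch that computes how much slower (or less efficient) swraid was than hwraid for each measurement listed above, expressed as a percentage of the hwraid value. The metric definition and the helper names (`minutes`, `swraid_penalty`, `RESULTS`) are my own illustrative assumptions, not part of any benchmark tool; the inputs are only the figures reported in this post.

```python
def minutes(m, s=0):
    """Convert a minutes/seconds duration to fractional minutes."""
    return m + s / 60


# (label, hwraid value, swraid value, higher_is_better), copied from the results above.
RESULTS = [
    ("kernel make (host), min", minutes(43), minutes(43, 15), False),
    ("hdparm cached reads, MB/sec", 9944.86, 9669.00, True),
    ("hdparm buffered reads, MB/sec", 111.68, 108.92, True),
    ("kernel make (1 VM), min", 50, 53, False),
    ("kernel make (5 VMs, avg), min", 84, 88, False),
    ("host load (5 VMs, avg)", 4.40, 5.30, False),
]


def swraid_penalty(hw, sw, higher_is_better):
    """Percentage by which swraid trails hwraid, relative to the hwraid value.

    Assumed metric: positive means hwraid did better, negative means swraid did.
    """
    if higher_is_better:
        return (hw - sw) / hw * 100
    return (sw - hw) / hw * 100


if __name__ == "__main__":
    for label, hw, sw, higher in RESULTS:
        print(f"{label:32} {swraid_penalty(hw, sw, higher):6.2f}%")
```

With these figures the gap works out to about 0.6% for the host kernel build and 6% for the single-VM build, while the average host load under five concurrent VMs is the one metric where the difference is noticeably larger.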

Be advised that the LSI SAS 6 card, while decent, is by no means a "high-end" piece of equipment. A DELL H700 might have fared a lot better in these tests; time permitting, I will try to redo them on faster hardware.